2 September 2019

One of the exciting things about modern technology is that the hype often becomes reality – and that could be the case with Artificial Intelligence (AI).

It aims to mimic human intelligence, which is the ability to acquire and apply knowledge and skills. It’s not yet clear how the human brain actually does this – how we learn, think and remember things – but we do know that the vast interconnections of neurons in the brain play a part.

Existing AI shows that it’s possible to emulate some aspects of intelligence by feeding data into a computer and using sophisticated algorithms to find patterns in it. Those patterns are then used to make predictions about new data; this process of learning from data is known as Machine Learning (ML). The most advanced AI algorithms use an ML approach called Deep Learning (DL) to perform human-like tasks such as:

· Image recognition, face recognition and face detection

· Speech recognition, natural language processing (NLP), natural language understanding (NLU) and natural language generation (NLG)

· Handwriting recognition and generation

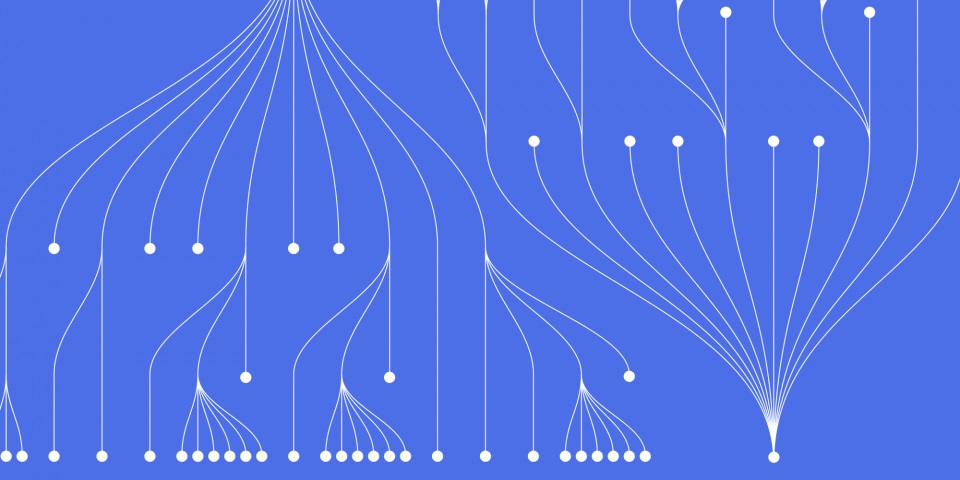
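The basic Machine Learning loop described above (find a pattern in data, then use it to predict) can be shown at its very smallest with a straight-line fit. This is a minimal sketch in plain Python, not a Deep Learning model: the "study hours vs. test score" data is hypothetical, and the pattern learned is simply the slope `w` and intercept `b` that minimise squared error (ordinary least squares).

```python
# Minimal sketch: learn y = w*x + b from example data, then predict.
# The data below is hypothetical and chosen only for illustration.

def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (w, b) minimising the squared error of y = w*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by variance of x.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict(w: float, b: float, x: float) -> float:
    """Apply the learned pattern to a new input."""
    return w * x + b


hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 58.0, 64.0, 70.0, 76.0]

w, b = fit_line(hours, scores)
print(w, b)                 # 6.0 46.0  (the learned pattern)
print(predict(w, b, 6.0))   # 82.0      (prediction for an unseen input)
```

Deep Learning follows the same "fit, then predict" idea, but with millions of adjustable weights instead of two.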

DL uses artificial neural networks (ANNs) – mathematical models loosely based on the brain’s neural networks – built from many layers of connected nodes to achieve these tasks. Deep neural networks currently in use include convolutional neural networks (CNNs), long short-term memory (LSTM) networks (a type of recurrent neural network) and capsule networks (CapsNets).
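In a layered network, each node computes a weighted sum of the previous layer’s outputs, adds a bias and passes the result through a non-linear activation function. The sketch below wires up just two such layers in plain Python. The weights are hand-picked (hypothetical, not learned) so that the network computes XOR, a classic function that a single neuron of this kind cannot represent but two layers can; a real deep network learns its weights from data and has far more layers and nodes.

```python
# Minimal two-layer neural network forward pass with hand-picked weights.

def relu(v: float) -> float:
    """Rectified linear activation: negative inputs become 0."""
    return max(0.0, v)


def identity(v: float) -> float:
    return v


def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum + bias per node, then activation."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical weights chosen by hand so the network computes XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(x: list[float]) -> float:
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)            # hidden layer
    return dense(hidden, OUTPUT_W, OUTPUT_B, identity)[0]  # output layer


for x in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(x, forward(x))
# [0.0, 0.0] 0.0
# [0.0, 1.0] 1.0
# [1.0, 0.0] 1.0
# [1.0, 1.0] 0.0
```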

The current reality

Let’s look at image recognition as an example of AI in use today. Google Photos, the photo sharing and storage app, automatically analyses photos to identify visual features and subjects. It uses a large-scale CNN that was developed using TensorFlow. The image is fed into the CNN as a grid of pixel values: one value per pixel for a greyscale image, or three (red, green and blue) for a colour image. Nodes in the input layer receive this data and pass it to hidden layers that alternate between convolutional layers, which apply small learned filters to detect features such as edges and textures, and pooling (subsampling) layers, which shrink the resulting feature maps. Each successive layer represents the image more abstractly, and the output layer turns this deep representation into predictions such as the subjects in the photo.
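The convolution and pooling steps described above can be sketched in plain Python. This is a minimal illustration, not Google Photos’ network: it assumes a single greyscale channel, one hand-picked 2×2 kernel (a hypothetical vertical-edge detector; a real CNN learns many kernels during training), stride 1 and no padding. As in most deep-learning libraries, `conv2d` here actually computes cross-correlation, meaning the kernel is not flipped.

```python
# Minimal sketch of one CNN stage: convolution -> ReLU -> max pooling.

def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Slide the kernel over the image (no padding, stride 1) and sum products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu_map(fmap: list[list[float]]) -> list[list[float]]:
    """Zero out negative responses in a feature map."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: list[list[float]], size: int = 2) -> list[list[float]]:
    """Non-overlapping max pooling (subsampling); leftover edge cells are dropped."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# 5x5 greyscale image: dark on the left, bright on the right.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hypothetical kernel that responds to dark-to-bright vertical edges.
kernel = [[-1.0, 1.0], [-1.0, 1.0]]

feature = relu_map(conv2d(image, kernel))  # 4x4 feature map
pooled = max_pool(feature)                 # 2x2 after pooling
print(pooled)  # [[2.0, 0.0], [2.0, 0.0]]
```

The pooled map keeps the strong edge response on the left while halving each dimension, which is what lets deeper layers work on progressively smaller, more abstract summaries of the image.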

Speech recognition is another example of AI that uses Deep Learning technology. Sound waves, represented as a spectrogram (the strength of each frequency over time), are fed into an LSTM deep neural network. Because an LSTM keeps a memory of earlier inputs, it can recognise patterns that unfold across a sequence of spectrogram frames and learn to map them to words. Alexa and Google Assistant use deep neural networks for speech recognition.
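The "memory" an LSTM keeps can be seen in a single unit stepping through spectrogram frames. This is a minimal sketch of the standard LSTM cell equations (forget, input and output gates plus a candidate value), with a scalar hidden state and cell state. The three-band frames and all weights are hypothetical and hand-picked; a real recogniser uses many units with learned weights, plus further layers that map the LSTM outputs to words, none of which is shown here.

```python
import math
from dataclasses import dataclass


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


@dataclass
class Gate:
    w: list[float]  # weights on the current input frame
    u: float        # weight on the previous hidden state
    b: float        # bias

    def pre(self, x: list[float], h: float) -> float:
        """Pre-activation: w.x + u*h + b."""
        return dot(self.w, x) + self.u * h + self.b


@dataclass
class LSTMUnit:
    """A single LSTM unit with scalar hidden state h and cell state c."""
    forget_gate: Gate
    input_gate: Gate
    output_gate: Gate
    candidate: Gate

    def run(self, frames: list[list[float]]) -> list[float]:
        h, c = 0.0, 0.0
        outputs = []
        for x in frames:
            f = sigmoid(self.forget_gate.pre(x, h))  # how much old memory to keep
            i = sigmoid(self.input_gate.pre(x, h))   # how much new information to write
            o = sigmoid(self.output_gate.pre(x, h))  # how much memory to expose
            g = math.tanh(self.candidate.pre(x, h))  # candidate new information
            c = f * c + i * g                        # update the cell (memory) state
            h = o * math.tanh(c)                     # output for this frame
            outputs.append(h)
        return outputs


# Hypothetical hand-picked weights for 3 frequency bands per frame.
unit = LSTMUnit(
    forget_gate=Gate([0.0, 0.0, 0.0], 0.0, 2.0),  # positive bias: tends to remember
    input_gate=Gate([1.0, 1.0, 1.0], 0.0, 0.0),
    output_gate=Gate([0.0, 0.0, 0.0], 0.0, 1.0),
    candidate=Gate([0.5, 0.5, 0.5], 0.0, 0.0),
)

loud = [1.0, 0.8, 0.6]    # a frame with energy in all three bands
silent = [0.0, 0.0, 0.0]  # a silent frame

history = unit.run([loud, silent, silent])
quiet = unit.run([silent, silent, silent])
print(history)  # stays positive: the loud first frame is still "remembered"
print(quiet)    # [0.0, 0.0, 0.0]
```

The two runs end on identical silent frames yet produce different outputs, because the cell state carries information from the earlier loud frame forward, gradually fading as the forget gate discounts it.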

The future reality?

AI today can do a number of specific tasks extremely well, although it relies heavily on carefully curated data and complex algorithms. It is still no match for human intellect or general intelligence. It also doesn’t pass the Turing test, where ‘a machine could be said to think if its responses to questions could not be distinguished from those of a human’.

But the point is this – AI and ML systems learn from experience and perform better over time. So if they are tasked with developing their own applications, it is arguably just a matter of time before they perform as well as us, or better.

Here at JBI Training, we provide a range of exceptional training courses on AI and ML including:

· Data Science and AI/ML (Python) training course (5 days) where you learn the core concepts of Python and how to apply it to AI applications – See our Data Science and AI/ML (Python) training course outline

· TensorFlow training course (3 days) where you learn to use this Google open source software library for numerical computation using data flow graphs – See our TensorFlow training course outline

· AI for Business and IT Staff training course (1 day) where you learn about the possibilities offered by AI as well as the terminology and market forces – See our AI for Business and IT Staff training course outline.
